What if ChatGPT is Actually a Tour Guide From Another World? (Part 2)

I tested a hunch and stumbled upon something beautiful and mysterious inside GPT.

Part 1 of this post hypothesized that ChatGPT is a tour guide leading us through a high-dimensional version of the computer game Minecraft.

Outrageous? Absolutely, but I tested the hypothesis anyway and discovered something mysterious and beautiful deep inside GPT. Here’s what I found and the steps I took to uncover it.

To begin, let’s clarify what we mean by “dimension.” Then we’ll collect dimensional data from GPT-4 and compare it to Minecraft. Finally, just for fun, we’ll create a Minecraft world that uses actual GPT-4 data structures and see how it looks.

To clarify “dimension,” consider this quote:

“I think it's important to understand and think about GPT-4 as a tool, not a creature, which is easy to get confused...” (Sam Altman, CEO of OpenAI, testimony before the Senate Judiciary Subcommittee on Privacy, Technology, and the Law, May 16, 2023)

Horse or hammer? We could just ask ChatGPT. However, the answer will hinge on ChatGPT’s level of self-awareness, which itself depends on how creature-like it is, creating a catch-22.

Instead, we'll use our intuition and look at the problem in different dimensions. A dimension is a measurable extent of some kind. For example, in a “tool” dimension, a hammer seems more tool-like than a horse. In two dimensions (tool and creature), it’s a similar story: horses are both more creature-like and less tool-like than hammers.

Where does GPT fit in? Probably closer to the hammer in both cases.

What if we add a third dimension called “intelligence”? Here’s where things get interesting. Horses are smarter than a bag of hammers, and GPT seems pretty smart too. So, in these three dimensions, GPT may actually be somewhere between a horse and a hammer.
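To see why the third dimension matters, here is a toy Python sketch that places the horse, the hammer, and GPT at made-up positions on three 0-to-1 scales (tool, creature, intelligence) and measures straight-line distances between them. The scores are hypothetical, chosen only to mirror the argument above; they are not measurements of anything.

```python
import math

# Hypothetical scores on 0-to-1 scales, in the order (tool, creature, intelligence).
# They are illustrative guesses, not measurements.
SCORES = {
    "horse": (0.1, 0.9, 0.6),
    "hammer": (0.9, 0.0, 0.0),
    "GPT": (0.8, 0.1, 0.8),
}


def distance(a, b, dims):
    """Euclidean distance between two items, using only the first `dims` scales."""
    return math.dist(SCORES[a][:dims], SCORES[b][:dims])


for dims in (2, 3):
    to_horse = distance("GPT", "horse", dims)
    to_hammer = distance("GPT", "hammer", dims)
    print(f"{dims} dimensions: GPT-horse {to_horse:.2f}, GPT-hammer {to_hammer:.2f}")
```

With only the tool and creature scales, GPT sits almost on top of the hammer; adding the intelligence scale pulls it away from the hammer and narrows the gap to the horse.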

Visualizing two dimensions is easy and three is a little harder, but there’s no reason we couldn’t describe horses and hammers in thousands of dimensions. In fact, there are good reasons to do so, because measuring things along more dimensions captures more about them. The marvel of GPT is that it seems to have plotted not just horses and hammers but almost everything there is, in thousands of dimensions!

But how does GPT represent things in thousands of dimensions?

With something called embeddings.

Embeddings are a way to convert words, pictures and other data into a list of numbers so computers can grasp their meanings and make comparisons.

Let's say we wanted a computer to grasp the meaning of apples and lemons. Assigning a single number to each fruit might work, but fruits are more complex than that. So we use a list of numbers, where each number describes something like how the fruit looks, how it tastes, or its nutritional content. These lists are embeddings, and they help ChatGPT know that apples and lemons are both fruits but taste different.
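Here is a toy version of that idea in Python. The four hand-picked features and their values are hypothetical; real embeddings are learned by a model, and their individual numbers don't come with human-readable labels like these. Cosine similarity, a common way to compare embeddings, scores how closely two lists of numbers point in the same direction.

```python
import math

# Hypothetical hand-made "embeddings". Feature order:
# (is_fruit, sweetness, sourness, hardness), each on a 0-to-1 scale.
TOY = {
    "apple": [1.0, 0.8, 0.3, 0.3],
    "lemon": [1.0, 0.2, 0.9, 0.2],
    "hammer": [0.0, 0.0, 0.0, 1.0],
}


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: near 1.0 means 'pointing the same way'."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


print(cosine_similarity(TOY["apple"], TOY["lemon"]))   # both fruits: fairly high
print(cosine_similarity(TOY["apple"], TOY["hammer"]))  # fruit vs. tool: low
```

Apple and lemon score high mainly because they share the "fruit" feature, yet their sweetness and sourness entries still differ, which is the "both fruits but taste different" distinction in the text.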

Sadly, GPT embeddings defy human comprehension and visualization. For example, the embedding for just the word “apple” is a list of roughly three thousand numbers that looks like this:

Is it possible to reduce the number of dimensions without compromising the overall structure? Fortunately, this sort of thing happens all the time: on a sunny day, your shadow is a two-dimensional representation of your three-dimensional body. There are fancy ways of performing such reductions mathematically (principal component analysis is a popular one), but we’re going to keep things really simple: take the first three numbers of each embedding that OpenAI gives us and throw away the rest.
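To make the "shadow" idea concrete, here is a Python sketch with NumPy that compares the post's approach (keeping the first three numbers) with principal component analysis (PCA), one of the fancier methods, which the post itself does not use. The random 3,072-long vectors are stand-ins, not real OpenAI embeddings, and both function names are hypothetical.

```python
import numpy as np


def truncate(embeddings, k=3):
    """Keep only the first k coordinates of each row (the approach used in this post)."""
    return embeddings[:, :k]


def pca_project(embeddings, k=3):
    """Project rows onto the k directions of greatest spread (principal component analysis)."""
    centered = embeddings - embeddings.mean(axis=0)
    # Rows of vt are the principal directions, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:k].T


# Stand-in data: 9 random vectors of length 3,072, NOT real OpenAI embeddings.
rng = np.random.default_rng(0)
fake_embeddings = rng.normal(size=(9, 3072))

print(truncate(fake_embeddings).shape)     # (9, 3)
print(pca_project(fake_embeddings).shape)  # (9, 3)
```

Both functions cast an orthogonal "shadow" onto three axes. Truncation always uses the first three coordinate axes, while PCA picks the three axes along which the points spread out most, so its shadow preserves at least as much of the overall spread.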

Could this possibly work?

Let’s find out. We’ll kick things off by selecting a few words to experiment with: horse, hammer, apple, and lemon. Then, to keep things interesting, we’ll also pick a few words and phrases that may (or may not) be semantically connected: “cinnamon,” “given to teachers,” “pie crust,” “hangs from a branch,” and “crushed ice.”

Next, we’ll look up their embeddings. OpenAI makes this easy with its embeddings API: you give it a word or phrase, and it returns a single embedding, a list of about three thousand numbers (3,072 to be exact).

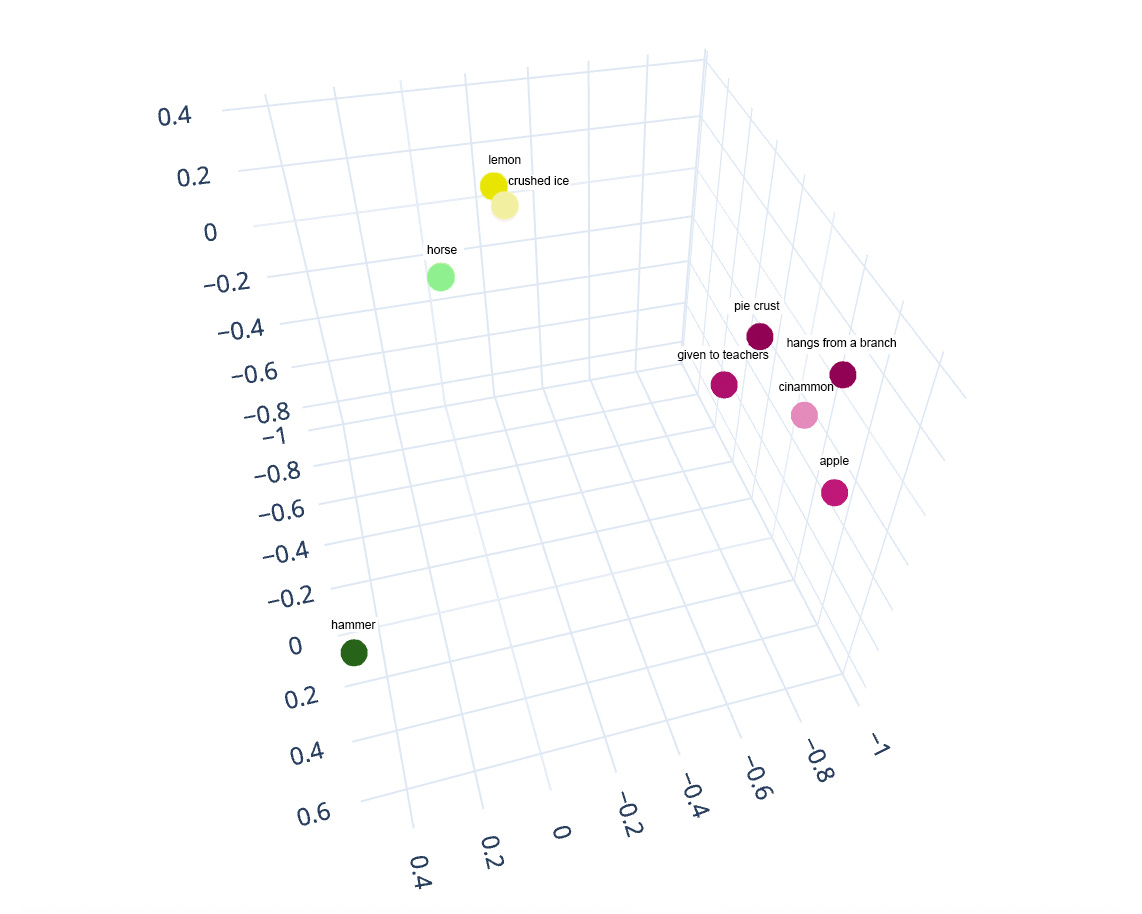
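The lookup described above can be sketched in Python, assuming the official `openai` package (version 1 or later) and an `OPENAI_API_KEY` environment variable. The post doesn't name the model; `text-embedding-3-large` is an assumption here because it is the OpenAI embedding model that returns 3,072 numbers by default. `get_embeddings` and `WORDS` are hypothetical names.

```python
WORDS = [
    "horse", "hammer", "apple", "lemon",
    "cinnamon", "given to teachers", "pie crust", "hangs from a branch", "crushed ice",
]


def get_embeddings(texts, model="text-embedding-3-large"):
    """Return a dict mapping each input string to its embedding (a list of floats).

    Assumptions: the official `openai` Python package (v1 or later) is installed,
    OPENAI_API_KEY is set, and the model name matches the post's 3,072-number output.
    """
    # Imported inside the function so this file can be loaded without the package.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.embeddings.create(model=model, input=list(texts))
    # Each returned item carries the index of the input it belongs to; sort to be safe.
    items = sorted(response.data, key=lambda item: item.index)
    return {text: item.embedding for text, item in zip(texts, items)}
```

For example, `embeddings = get_embeddings(WORDS)` sends all nine strings in one request, and `len(embeddings["apple"])` should then be 3,072. Batching the strings simply saves round trips compared with one call per word.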

Using a snippet of code, we’ll keep the first three numbers of each embedding and discard the rest. Here’s the result:
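The snippet itself isn't shown in the post; a minimal Python version might look like this, assuming the embeddings are stored in a dict that maps each word or phrase to its list of numbers. `first_three`, `print_table`, and the demo values are hypothetical.

```python
def first_three(embeddings):
    """Keep only the first three numbers of each embedding, as an (x, y, z) tuple."""
    return {text: tuple(vector[:3]) for text, vector in embeddings.items()}


def print_table(coords):
    """Print one row per word or phrase with its x, y and z values."""
    print(f"{'word or phrase':<22}{'x':>10}{'y':>10}{'z':>10}")
    for text, (x, y, z) in coords.items():
        print(f"{text:<22}{x:>10.4f}{y:>10.4f}{z:>10.4f}")


# Hypothetical stand-in vectors (real ones have 3,072 numbers each).
demo = {
    "apple": [0.01, -0.02, 0.03, 0.5],
    "lemon": [0.02, 0.01, -0.04, 0.7],
}
print_table(first_three(demo))
```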

What exactly are these numbers? If we're being honest, nobody really knows; each one seems to pinpoint the location of a word or phrase along a specific, somewhat mysterious dimension inside GPT. For our purposes, let’s treat the three numbers as though they were x, y, z coordinates. This approach requires an astoundingly audacious leap of faith, but we won’t dwell on that. Instead, we'll plot them on a graph and see what emerges.
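A minimal plotting sketch, assuming Matplotlib is installed; the post doesn't say which tool produced its graph. `plot_3d` is a hypothetical helper that takes a dict mapping each word or phrase to an (x, y, z) tuple and saves a labeled 3D scatter plot as a PNG.

```python
import matplotlib

matplotlib.use("Agg")  # render straight to a file; no display window needed
import matplotlib.pyplot as plt


def plot_3d(coords, path="embeddings_3d.png"):
    """Scatter each word or phrase at its (x, y, z) position and label it."""
    fig = plt.figure(figsize=(7, 7))
    ax = fig.add_subplot(projection="3d")
    for text, (x, y, z) in coords.items():
        ax.scatter(x, y, z)
        ax.text(x, y, z, text)
    ax.set_xlabel("dimension 1 (x)")
    ax.set_ylabel("dimension 2 (y)")
    ax.set_zlabel("dimension 3 (z)")
    fig.savefig(path)
    return fig, ax
```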

Do you see it?!!

John Firth, the linguist famous for “You shall know a word by the company it keeps,” would be proud. Apple-ish things seem to be neighbors (ready to make a pie). Crushed ice and lemons are next to each other (ready to make lemonade). Hammer is off in a corner.
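Firth's idea can be checked directly: for each word or phrase, which other entry is closest? Here is a short Python sketch using straight-line (Euclidean) distance. The real coordinates aren't reproduced in this text, so the values below are hypothetical placeholders arranged to mimic the pie and lemonade clusters; substitute the actual first-three values to test the claim.

```python
import math


def nearest_neighbor(coords):
    """For each word or phrase, return the other entry closest to it in 3D."""
    result = {}
    for text, point in coords.items():
        others = [
            (math.dist(point, other_point), other)
            for other, other_point in coords.items()
            if other != text
        ]
        result[text] = min(others)[1]  # smallest distance wins
    return result


# Hypothetical coordinates for illustration only.
example = {
    "apple": (0.0, 0.0, 0.0),
    "pie crust": (0.1, 0.0, 0.0),
    "lemon": (1.0, 1.0, 0.0),
    "crushed ice": (1.0, 1.1, 0.0),
    "hammer": (5.0, 5.0, 5.0),
}
print(nearest_neighbor(example))
```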

If you're not completely blown away by this result, maybe it's because you’re a data scientist who's seen it all before. As for me, I can’t believe what just happened: we looked up the embeddings for nine words and phrases, discarded 99.9% of the data, and then plotted the remaining bits on a 3D graph. Amazingly, the locations make intuitive sense!
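A quick check of the 99.9% figure: each embedding has 3,072 numbers, and we kept 3 of them.

```latex
\frac{3}{3072} \approx 0.00098 \approx 0.1\%, \qquad
1 - \frac{3}{3072} \approx 0.99902 \approx 99.9\%

9 \times 3072 = 27\,648 \text{ numbers in total}, \qquad
9 \times 3 = 27 \text{ numbers kept}
```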

Still not astonished? Then perhaps you're wondering how all this relates to Minecraft. For the gamers, we're about to take the analysis one step further.

Using Minecraft Classic, we’ll build an 8 x 8 x 8 walled garden, then “plot” the words and phrases just like we did in the 3D graph. Here’s what that looks like:
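The post doesn't say exactly how graph coordinates became block positions, so here is one plausible Python sketch: scale each axis independently so the smallest value lands on block 0 and the largest on block 7. `to_block_grid` is a hypothetical helper, and min-max scaling plus rounding is an assumption, not necessarily what was done for the garden.

```python
def to_block_grid(coords, size=8):
    """Map (x, y, z) coordinates to integer block positions 0..size-1 on each axis.

    Each axis is min-max scaled on its own: the smallest value on that axis lands
    on block 0 and the largest on block size-1. This scheme is an assumption.
    """
    columns = list(zip(*coords.values()))  # all x values, all y values, all z values
    lows = [min(column) for column in columns]
    highs = [max(column) for column in columns]

    def scale(value, low, high):
        if high == low:  # every entry shares this value, so put them all on block 0
            return 0
        return round((value - low) / (high - low) * (size - 1))

    return {
        text: tuple(scale(v, lo, hi) for v, lo, hi in zip(point, lows, highs))
        for text, point in coords.items()
    }


# Hypothetical coordinates for illustration only.
example = {
    "apple": (0.01, -0.03, 0.02),
    "hammer": (-0.02, 0.04, -0.01),
    "lemon": (0.03, 0.00, 0.00),
}
print(to_block_grid(example))
```

In Minecraft the y axis points up, so if you want the graph's z axis to be height, swap the second and third values when placing blocks. Rounding can also put two nearby words into the same block.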

Notice that the positions of the words and phrases in the garden match those in the 3D graph. That’s because the embeddings act like location coordinates in a virtual world, in this case Minecraft. What we’ve done is take a 3,072-dimensional embedding space and reduce it to a three-dimensional “shadow” space in Minecraft, which can then be explored like this:

Who's the explorer leaping through our garden? That's ChatGPT, the high-dimensional docent, fluent in complex data structures and our emissary to the elegant and mysterious world of GPT. When we submit a prompt, it is ChatGPT who discerns our intent (no small feat, accomplished with something called attention mechanisms), then glides effortlessly through thousands of dimensions to lead us to exactly the right spot in the GPT universe.

Does all this mean ChatGPT is actually a tour guide from another world? Is it actually operating inside a high-dimensional game space? While we can't say for certain, GPT does seem more game-like than either a horse or a hammer:

Images by author unless otherwise noted.

—

References:

"API Reference." OpenAI, [4/4/2024]. https://platform.openai.com/docs/api-reference.

Sadeghi, Zahra, James L. McClelland, and Paul Hoffman. "You shall know an object by the company it keeps: An investigation of semantic representations derived from object co-occurrence in visual scenes." Neuropsychologia 76 (2015): 52-61.

Balikas, Georgios. "Comparative Analysis of Open Source and Commercial Embedding Models for Question Answering." Proceedings of the 32nd ACM International Conference on Information and Knowledge Management. 2023.

Hoffman, Paul, Matthew A. Lambon Ralph, and Timothy T. Rogers. "Semantic diversity: A measure of semantic ambiguity based on variability in the contextual usage of words." Behavior Research Methods 45 (2013): 718-730.

Brunila, Mikael, and Jack LaViolette. "What company do words keep? Revisiting the distributional semantics of JR Firth & Zellig Harris." arXiv preprint arXiv:2205.07750 (2022).

Gomez-Perez, Jose Manuel, et al. "Understanding word embeddings and language models." A Practical Guide to Hybrid Natural Language Processing: Combining Neural Models and Knowledge Graphs for NLP (2020): 17-31.